Neural style transfer is a computer vision technique that uses a neural network to recompose one image’s content in the style of another. If you’ve ever wondered how a photograph might look if a famous artist had painted it, neural style transfer is the technology that makes it possible.

So how does neural style transfer work in practice? What are the main techniques, benefits, and drawbacks?

Whether you’re a software engineer contemplating an implementation, a developer who wants to learn more, or an artist interested in using style transfer in your work, this article is for you! It covers how the technique works, how it developed, and where it is used.

What Exactly is Style Transfer?

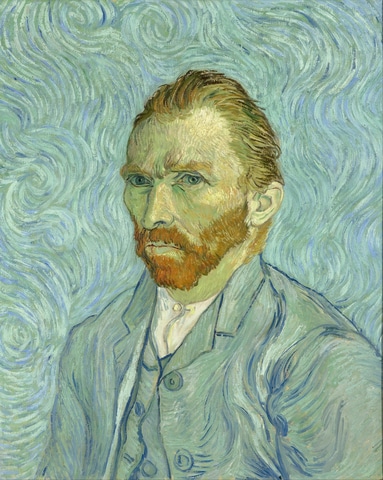

Style transfer is a computer vision technique that combines two images, a content image and a style reference image, so that the output keeps the essential elements of the content image while appearing to be “painted” in the aesthetic of the style reference.

A selfie, for instance, might serve as the content image, while a Salvador Dali painting serves as the style reference. The end result would be a self-portrait that resembles a Salvador Dali original! You can check out some examples in my Salvador Dali Style Guide.

Types and Modes of Style Transfer

The broader topic of style transfer, long studied under the name non-photorealistic rendering, has been explored for decades. Neural style transfer (NST) itself was introduced in 2015 in the Gatys paper, “A Neural Algorithm of Artistic Style,” which performs style transfer using neural networks. [1]

Some neural approaches are trained on pairs of source images: an original image and an artistically expressive interpretation of it. Training on such pairs is a typical supervised learning task. Once the network is trained, the style can be applied to new original photos, and even to videos! [2]

Generally, there are two types of style transfer. One is called a ‘photorealistic’ style transfer, where the content and style images are both ‘real’. The goal of a photorealistic transfer is to improve or augment the original content image in some way. The second type is called an ‘artistic’ style transfer, where the artistic style of some painting or image is transferred to the content image.

Implementing Neural Style Transfer

Let’s take a closer look at how style transfer works now that we understand what it is and which types exist.

In recent years, neural style transfer has become more capable thanks to the spread of deep learning techniques and to faster, better-supported GPUs for training.

One important factor for training neural networks for style transfers is having a large enough set of training data. Want to learn more about quickly increasing the size of your training set? Check out my post about Data Augmentation!

NST uses deep neural networks to power the transfer. A style transfer network is a convolutional neural network (CNN), often one pretrained for image recognition such as VGG, that is applied to a different task than recognition and classification. [3]

Just as each layer of a CNN extracts different features, the style transfer network extracts the ‘content’ and the ‘style’ of the two images. The simplest form of this technique uses optimization and balances the output of two separate cost functions.

Specific layers learn to retrieve image content (such as an animal’s shape or a car’s location). In contrast, others adapt to focus on textures such as the individual brushstrokes of an artist or the fractal patterns found in nature.

If you are interested in how natural fractal patterns are expressed in art, check out my deep dive post!

The first function is called the ‘content loss’ function, and the second function is called the ‘style loss’ function. By finding a transformation which optimizes both functions, the content and style can both be preserved during the transfer. If you want to know more about the specifics of the loss functions, check out my post about loss functions.
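To make the balancing act concrete, here is a minimal NumPy sketch of a content loss and a weighted total. The function names, the mean-squared-error form, and the default weights are my own illustrative assumptions, and the random arrays stand in for real CNN feature maps.

```python
import numpy as np

def content_loss(generated_features, content_features):
    """Mean squared difference between two feature arrays of the same shape."""
    diff = generated_features - content_features
    return float(np.mean(diff ** 2))

def total_loss(content_term, style_term, content_weight=1.0, style_weight=1000.0):
    """Weighted sum that trades content fidelity against style fidelity.

    The default weights are arbitrary placeholders, not recommended values.
    """
    return content_weight * content_term + style_weight * style_term

# Stand-in feature maps with shape (channels, height, width).
rng = np.random.default_rng(0)
content_features = rng.random((8, 16, 16))
generated_features = content_features + 0.1  # a uniformly shifted copy

c_loss = content_loss(generated_features, content_features)
print(c_loss)                     # approximately 0.01
print(total_loss(c_loss, 0.002))  # content term plus the weighted style term
```

Raising `style_weight` relative to `content_weight` pushes the result toward the style image, and lowering it keeps the output closer to the original content.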

The key innovation of the Gatys technique was that a CNN allowed these two concepts to be decoupled mathematically. [1]

The content information is represented as ‘feature maps’. These are arrays of activations that respond to edges or to the higher-level features that make up the image.

For example, if the network were recognizing cars, a low-level feature map might contain the edge separating the tire from the hubcap.

A higher-level feature map might contain something like a wheel or a door. The highest level would map the wheel, door, and window features to the ‘car’ feature.

The style information is represented as matrices called ‘Gram matrices’. Each entry is the inner product of two feature maps from the same layer of the style image, so the matrix is symmetric and behaves much like an uncentered covariance matrix of the features.

Measuring how strongly pairs of features activate together captures which features tend to be present or absent together, regardless of where in the image they occur. The set of these Gram matrices at each layer is the ‘style’ information.
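Here is a small NumPy sketch of how a Gram matrix and a style loss could be computed from one layer’s feature maps. The function names, the normalization by the number of spatial positions, and the mean-squared form of the loss are assumptions for illustration, and random arrays stand in for real CNN activations.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (channels, height, width) feature array."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)   # one row per feature map
    return flat @ flat.T / flat.shape[1]    # inner products, averaged over positions

def style_loss(generated_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
style_features = rng.random((4, 5, 5))
gram = gram_matrix(style_features)
print(gram.shape)                 # (4, 4): one entry per pair of feature maps
print(np.allclose(gram, gram.T))  # True: the matrix is symmetric
```

Because the inner products sum over every spatial position, shuffling the positions of a feature array leaves its Gram matrix unchanged. That is one way to see why this representation keeps texture-like statistics and discards layout.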

History of Neural Style Transfer

Let’s look at the timeline:

- 2012: Interest in CNNs surges after AlexNet wins the ImageNet Large Scale Visual Recognition Challenge.

In this high-profile image recognition challenge, a CNN called AlexNet beat the runner-up by more than 10 percentage points in top-5 error rate. This sparked a flurry of interest in CNNs, paving the way for neural style transfer. [4]

- 2015: Gatys proposes the neural style transfer algorithm.

This is the method discussed above in Implementing Neural Style Transfer. Its early iterations, however, were not without flaws. The transfer was posed as an optimization problem that took hundreds or thousands of iterations per image, which made it computationally slow. [1] Researchers needed a faster neural style transfer method to address this inefficiency.
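To give a feel for why the optimization approach is slow, here is a toy NumPy sketch of the iterative loop. It is not the Gatys implementation: to keep the gradient simple enough to write by hand, I assume the ‘features’ are just the pixel values themselves, arranged as (channels, positions), instead of activations from a CNN. The weights, learning rate, and step count are arbitrary.

```python
import numpy as np

def gram(x):
    """Gram matrix of a (channels, positions) array."""
    return x @ x.T

def loss_and_gradient(x, content, style_gram, alpha, beta):
    """Weighted content + style loss and its gradient with respect to x."""
    channels, positions = x.shape
    scale = 1.0 / (channels ** 2 * positions ** 2)
    gram_diff = gram(x) - style_gram
    content_term = 0.5 * np.sum((x - content) ** 2)
    style_term = 0.25 * scale * np.sum(gram_diff ** 2)
    # Hand-derived gradients of the two terms above.
    gradient = alpha * (x - content) + beta * scale * (gram_diff @ x)
    return alpha * content_term + beta * style_term, gradient

def stylize(content, style, alpha=1.0, beta=100.0, learning_rate=0.05, steps=200):
    """Start from the content image and take many small gradient steps."""
    style_gram = gram(style)
    x = content.copy()
    history = []
    for _ in range(steps):
        loss, gradient = loss_and_gradient(x, content, style_gram, alpha, beta)
        history.append(loss)
        x -= learning_rate * gradient
    return x, history

rng = np.random.default_rng(0)
content = rng.random((3, 64))   # stand-in for a tiny 8x8 RGB image
style = rng.random((3, 64))
result, history = stylize(content, style)
print(history[0], history[-1])  # the loss shrinks as the iterations proceed
```

Every output image needs its own loop like this one, and with a real CNN each step also requires a forward and backward pass through the network.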

- 2016: Johnson’s team introduces a faster style transfer using a feed-forward neural network.

This method was far faster than the Gatys method, producing results quickly enough for style transfer in real time. [5] Fast style transfer restyles a picture in a single feed-forward pass. Instead of running thousands of optimization iterations for each image, a network trained once for a given style can restyle any content image in just one pass.

- 2016: Ruder’s research group applies neural style transfer to videos.

The paper presented new cost functions for the optimization process, chiefly a temporal constraint that keeps the stylization consistent from frame to frame of the video. [8]
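A temporal constraint of this kind can be sketched as a penalty on how much the stylized frame differs from the previous stylized frame. The NumPy sketch below is a simplification under my own assumptions: the previous frame is taken to be already warped into alignment with the current one (for example by optical flow), and `valid_mask` is a hypothetical per-pixel mask that switches the penalty off where that alignment is unreliable.

```python
import numpy as np

def temporal_loss(current_frame, warped_previous_frame, valid_mask):
    """Mean squared change between frames, counted only where valid_mask is 1.

    Frames have shape (height, width, 3); valid_mask has shape (height, width).
    """
    squared_diff = (current_frame - warped_previous_frame) ** 2
    weighted = squared_diff * valid_mask[..., np.newaxis]
    return float(weighted.sum() / current_frame.size)

previous = np.zeros((2, 2, 3))
current = np.ones((2, 2, 3))
left_half_only = np.array([[1.0, 0.0],
                           [1.0, 0.0]])
print(temporal_loss(current, previous, left_half_only))  # 0.5
```

Adding this term to the content and style losses discourages flicker, because the optimizer is rewarded for keeping each pixel close to its stylized value in the previous frame.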

- 2017: Li’s team publishes a paper about understanding the style information.

This paper examined the Gram matrix and why it captures the style information. This research opened the door to alternative ways of capturing style. [6]

- 2017: Huang’s team publishes a paper about arbitrary style transfer which is also in real time.

This method allowed for the style to be altered arbitrarily without retraining the network. This was a big development because previous methods were trained to a specific style. [7] As a result, modern style transfer algorithms can even adapt to imprint numerous styles using the same model, allowing a single raw content image to be altered in various ways.
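The title of the cited paper names its key operation, adaptive instance normalization (AdaIN): the content features are rescaled so that each channel takes on the mean and standard deviation of the matching style channel. Here is a minimal NumPy sketch of that one step. The function name and the `eps` value are my own choices, random arrays stand in for encoder features, and the encoder and decoder networks that surround this step in a full system are omitted.

```python
import numpy as np

def adaptive_instance_norm(content_features, style_features, eps=1e-5):
    """Give content features the per-channel mean and std of the style features.

    Both inputs have shape (channels, height, width).
    """
    c_mean = content_features.mean(axis=(1, 2), keepdims=True)
    c_std = content_features.std(axis=(1, 2), keepdims=True) + eps  # avoid dividing by zero
    s_mean = style_features.mean(axis=(1, 2), keepdims=True)
    s_std = style_features.std(axis=(1, 2), keepdims=True)
    normalized = (content_features - c_mean) / c_std
    return s_std * normalized + s_mean

rng = np.random.default_rng(0)
content_features = rng.random((4, 8, 8))
style_features = rng.random((4, 8, 8)) * 3.0 + 2.0
restyled = adaptive_instance_norm(content_features, style_features)
print(restyled.mean(axis=(1, 2)))        # matches the style means per channel
print(style_features.mean(axis=(1, 2)))
```

Because the style enters only through these statistics, swapping in a different style image changes the output without any retraining.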

- 2018: Style transfer is applied to audio and music.

This method converted audio into a spectrogram (an image-like representation of a sound’s frequency content over time) and transferred the style of one spectrogram to another. [9]
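If you haven’t worked with spectrograms before, here is a short NumPy sketch of how one can be computed with a short-time Fourier transform. The frame length, hop length, and Hann window are illustrative choices of mine, not the settings used in the cited paper.

```python
import numpy as np

def magnitude_spectrogram(signal, frame_length=256, hop_length=128):
    """Return an array of shape (frequency bins, time frames)."""
    window = np.hanning(frame_length)
    n_frames = 1 + (len(signal) - frame_length) // hop_length
    frames = np.stack([
        signal[i * hop_length : i * hop_length + frame_length] * window
        for i in range(n_frames)
    ])
    # One FFT per windowed frame; keep magnitudes only.
    return np.abs(np.fft.rfft(frames, axis=1)).T

sample_rate = 8000
t = np.arange(sample_rate) / sample_rate   # one second of audio
tone = np.sin(2 * np.pi * 1000 * t)        # a pure 1000 Hz tone
spec = magnitude_spectrogram(tone)
print(spec.shape)                          # (129, 61)
```

The resulting 2D array can be treated like a single-channel image, which is what lets image-style techniques be reused on sound.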

As you can see from the timeline, the field of neural style transfer was invented only in the last decade, but has advanced rapidly.

Neural Style Transfer in Art

Due to these advancements in the current technology, practically anybody can experience the satisfaction of producing and sharing a creative, stunning masterpiece.

This is where style transfer’s transformative power lies. Artists can share their aesthetic with others, enabling new and unique depictions of artistic trends to coexist with original classics. We see the relevance of neural style transfer showing up in the professional art world, in addition to encouraging millions around the globe to explore their own creative outlets.

Neural style transfer can now be applied to both recorded and live video, thanks to the continuous advancement of artificial intelligence (AI)-accelerated technology. This opens up a world of possibilities in design, content creation, and creative tools.

Want to use a style like Van Gogh’s in your own neural style transfers? Check out the posts in the Style Guide series:

- Express yourself with the Van Gogh Style Guide.

- Peek at Picasso’s paintings with the Picasso Style Guide.

- Go on an abstract adventure with the Jackson Pollock Style Guide.

- Get an impression of Monet’s masterpieces in the Monet Style Guide.

- Take a Rothko Retrospective with the Mark Rothko Style Guide.

- Journey through the symbolic depths with Frida Kahlo’s Style Guide.

- Discover Dali’s surreal style with the Salvador Dali Style Guide.

Want to learn more about how style transfers and neural networks work?

- Don’t miss my article about what content loss is!

- Learn about Dropout and preventing overfitting during training

- Improve your training data using Data Augmentation techniques!

- Want to learn more about the influential VGG network? Check out my post covering why VGG is so common!

We can now see how neural style transfer can be used in a variety of ways as a result of this evolution:

- Photo and video editing

- Collaboration between artists and the general public

- Art for sale

- Gaming

- VR (virtual reality)

Of course, this isn’t a comprehensive list, but it covers some of the most critical ways that style transfer influences the future of the arts.

Variations of Model Architecture

There are far too many different neural network models to describe them all in this post.

I’ll spotlight a handful of variations that allow different aspects to be varied during the style transfer:

- Single style: a separate transfer network is trained for each desired style

- Multiple styles: a single network that lets users mix and match numerous styles

- Arbitrary styles: a single network that can extract the style of any reference image and apply it to an input image in one step

Extensions and optimizations:

- Stable style transfer: keeps the stylization of an object consistent as it moves through multiple video frames, without flicker.

- Color preservation: applies specific artistic elements, such as brushstrokes, while preserving the original palette of the input image.

- Photorealistic style transfer: transfers the look of one photograph to another while keeping the combined result photorealistic.
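As an illustration of the color preservation idea, one simple approach is to keep only the brightness (luminance) of the stylized image and take the color information from the original content image. The NumPy sketch below does this in YIQ color space as a post-processing step. The function name is hypothetical, the matrix holds the commonly quoted approximate RGB-to-YIQ coefficients, and images are assumed to be RGB arrays with values in [0, 1].

```python
import numpy as np

# Approximate RGB -> YIQ conversion matrix (Y is luminance, I and Q carry color).
RGB_TO_YIQ = np.array([
    [0.299, 0.587, 0.114],
    [0.596, -0.274, -0.322],
    [0.211, -0.523, 0.312],
])
YIQ_TO_RGB = np.linalg.inv(RGB_TO_YIQ)

def preserve_color(content_rgb, stylized_rgb):
    """Combine the stylized image's luminance with the content image's color.

    Both inputs have shape (height, width, 3).
    """
    content_yiq = content_rgb @ RGB_TO_YIQ.T
    stylized_yiq = stylized_rgb @ RGB_TO_YIQ.T
    combined = content_yiq.copy()
    combined[..., 0] = stylized_yiq[..., 0]   # swap in the stylized luminance
    return np.clip(combined @ YIQ_TO_RGB.T, 0.0, 1.0)

content = np.ones((4, 4, 3)) * np.array([0.5, 0.4, 0.3])  # a flat warm tone
stylized = np.full((4, 4, 3), 0.5)                        # a flat gray "stylization"
print(preserve_color(content, stylized)[0, 0])            # keeps the warm tint
```

Brushstroke-like texture lives mostly in the luminance channel, so the result keeps the stylized texture while the palette stays close to the original photo.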

Looking for some inspiration of an eye-catching style to use in your transfers? Check out the Jackson Pollock Style Guide.

Applications and Uses of Neural Style Transfer

Let’s look at how neural style transfer can be used in editing videos and photos, gaming, commercially sold art, and virtual reality (VR).

Editing Videos and Photos

The use of neural style transfer in video and photo editing software is one of the most prominent examples. The option to add famous art motifs to photographs and video clips gives these creation tools remarkable power, from sharing stylish selfies to enhancing user-generated videos and beyond.

Thanks to the performance and speed of contemporary deep learning algorithms, style transfer models can readily be deployed on edge devices such as mobile phones, enabling apps that analyze and modify video and photos in real time. This means that high-quality video and photo editing software will be more readily available and simpler to use than it has ever been.

A slew of great platforms currently exist that include neural style transfer in their toolkits.

Gaming

Game developers have used neural networks with in-game fast style transfer to instantly recompose virtual worlds with color palettes, textures, and themes from a wide spectrum of art styles.

Commercially Sold Neural Network Art

We’ve only recently entered an era of artificial intelligence (AI) creations, including everything from literature to artwork to music.

Neural style transfer aims to revolutionize the way we think about art, what uniqueness means, and how we show art in the real world, whether it’s artwork offered at a high-end event or up-and-coming artists seeking new ways to communicate their aesthetics with the public.

Style transfer could be used to produce repeatable, high-quality graphics for office spaces or large-scale marketing campaigns. These are only a few examples of how style transfer could alter our perceptions of art’s commercial value.

VR (Virtual Reality)

Much like gaming, virtual reality puts interactive digital worlds at the heart of the user experience, and it has sparked curiosity about what’s possible with neural style transfer.

While neural style transfer applications in virtual reality are still in the early stages of development, the possibilities are fascinating and promising.

Final Thoughts

I hope that the preceding overview helped you grasp the fundamentals of neural style transfer and how it may be applied in the real world. More answers, however, often lead to more inquiries. Stay tuned!

I sincerely hope that you will continue to dive deep into understanding all you can about neural networks, neural style transfer, and everything they entail.

For more information on other types of neural networks, check out my tutorial on Bayesian Neural Networks and how to create them using two different popular Python libraries.

Check out some other posts in the Style Transfer Category to learn more on this endlessly terrific topic.

References

1. “A Neural Algorithm of Artistic Style.” Gatys et al., Werner Reichardt Centre for Integrative Neuroscience, 2 September 2015, https://arxiv.org/pdf/1508.06576.pdf

2. “Interactive Video Stylization Using Few-Shot Patch-Based Training.” Texler et al., Czech Technical University in Prague, 29 April 2020, https://arxiv.org/pdf/2004.14489.pdf

3. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” Simonyan et al., Visual Geometry Group, University of Oxford, 10 April 2015, https://arxiv.org/pdf/1409.1556.pdf

4. Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “Imagenet classification with deep convolutional neural networks.” Advances in neural information processing systems 25 (2012), https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

5. Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. “Perceptual losses for real-time style transfer and super-resolution.” European conference on computer vision. Springer, Cham, 2016, https://link.springer.com/chapter/10.1007/978-3-319-46475-6_43

6. Li, Yanghao, et al. “Demystifying neural style transfer.” arXiv preprint arXiv:1701.01036 (2017). https://arxiv.org/pdf/1701.01036.pdf

7. Huang, Xun, and Serge Belongie. “Arbitrary style transfer in real-time with adaptive instance normalization.” Proceedings of the IEEE international conference on computer vision. 2017. https://arxiv.org/pdf/1703.06868.pdf

8. Ruder, Manuel, Alexey Dosovitskiy, and Thomas Brox. “Artistic style transfer for videos.” German conference on pattern recognition. Springer, Cham, 2016. https://arxiv.org/pdf/1604.08610.pdf

9. Verma, Prateek, and Julius O. Smith. “Neural style transfer for audio spectograms.” arXiv preprint arXiv:1801.01589 (2018). https://arxiv.org/pdf/1801.01589.pdf